Evaluation results on MM-HELIX in both the multimodal (Img) and text-only (Txt) settings. The results underscore how difficult complex, long-chain reflective tasks remain for MLLMs. Thinking models with reflective reasoning capabilities generally score higher than non-thinking models. There is also a substantial modality gap: for nearly all models, text-only input yields a higher overall score than image input.

| Model | Thinking | Algorithms (Txt) | Algorithms (Img) | Graphs (Txt) | Graphs (Img) | Puzzles (Txt) | Puzzles (Img) | Games (Txt) | Games (Img) | Overall (Txt) | Overall (Img) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Proprietary Models | | | | | | | | | | | |
| GPT-5 | ✓ | 83.0 | 88.5 | 98.3 | 50.4 | 80.9 | 52.6 | 80.0 | 40.0 | 84.5 | 58.1 |
| Seed-1.5-VL | ✓ | 89.3 | 78.9 | 86.7 | 40.4 | 51.6 | 41.9 | 55.6 | 33.3 | 66.9 | 48.3 |
| o4-mini | ✓ | 76.3 | 50.7 | 95.0 | 42.1 | 69.1 | 45.8 | 66.7 | 35.6 | 75.2 | 44.7 |
| Gemini-2.5-Flash | ✓ | 92.6 | 66.7 | 88.3 | 40.8 | 52.1 | 36.7 | 49.4 | 28.3 | 67.3 | 42.7 |
| GPT-4.1 | ✗ | 61.9 | 44.4 | 73.8 | 35.0 | 30.9 | 16.8 | 13.9 | 8.9 | 43.3 | 25.1 |
| GPT-4o | ✗ | 33.7 | 18.9 | 44.6 | 25.4 | 10.2 | 4.2 | 10.6 | 6.7 | 21.8 | 11.7 |
| Open-Source Models | | | | | | | | | | | |
| Intern-S1-241B-A28B | ✓ | 75.2 | 69.3 | 76.7 | 30.0 | 35.3 | 23.5 | 26.1 | 15.0 | 50.4 | 33.3 |
| GLM-4.5V-106B-A12B-Thinking | ✓ | 49.6 | 29.3 | 40.4 | 11.3 | 15.3 | 20.2 | 12.2 | 13.9 | 27.0 | 19.5 |
| Kimi-VL-16B-A3B-Thinking-2506 | ✓ | 45.9 | 36.3 | 49.6 | 23.3 | 9.6 | 10.4 | 10.6 | 7.2 | 28.9 | 19.3 |
| GLM-4.1V-9B-Thinking | ✓ | 38.1 | 30.7 | 50.4 | 29.2 | 11.6 | 7.4 | 5.0 | 6.1 | 23.7 | 16.3 |
| Qwen-2.5-VL-72B | ✗ | 24.4 | 18.5 | 42.1 | 25.8 | 8.2 | 3.9 | 5.6 | 7.2 | 20.1 | 13.9 |
| Qwen-2.5-VL-32B | ✗ | 22.2 | 15.2 | 46.3 | 22.5 | 8.1 | 4.7 | 5.6 | 6.7 | 20.6 | 12.3 |
| QVQ-72B-Preview | ✓ | 22.6 | 21.1 | 36.7 | 16.7 | 4.9 | 3.3 | 6.7 | 3.3 | 17.7 | 11.1 |
| MiniCPM-V-4.5-8B | ✓ | 20.0 | 20.0 | 32.1 | 20.8 | 5.8 | 3.7 | 0.0 | 3.3 | 13.0 | 10.4 |
| InternVL3-78B | ✗ | 20.0 | 14.4 | 43.3 | 25.4 | 10.2 | 4.0 | 10.0 | 1.1 | 18.6 | 9.9 |
| InternVL3-38B | ✗ | 19.3 | 14.1 | 40.8 | 22.5 | 8.2 | 3.5 | 7.8 | 5.6 | 16.7 | 9.7 |
| Llama-4-Scout-109B-A17B-16E | ✗ | 24.1 | 16.3 | 40.8 | 21.3 | 4.4 | 4.2 | 2.2 | 1.7 | 15.2 | 9.7 |
| Ovis2-34B | ✗ | 14.4 | 10.4 | 33.8 | 22.1 | 3.9 | 1.2 | 5.0 | 1.7 | 12.0 | 7.2 |
| Gemma-3-27B-IT | ✗ | 20.7 | 10.4 | 44.2 | 22.1 | 6.5 | 0.5 | 5.6 | 1.7 | 16.6 | 6.9 |
| Qwen-2.5-VL-7B | ✗ | 5.6 | 5.9 | 25.4 | 17.9 | 0.4 | 0.4 | 0.6 | 1.1 | 8.0 | 6.3 |
| InternVL3-8B | ✗ | 8.1 | 5.9 | 28.8 | 16.7 | 1.6 | 0.7 | 1.1 | 1.1 | 8.1 | 4.9 |
| Ovis2-8B | ✗ | 7.8 | 3.3 | 24.2 | 15.4 | 0.5 | 0.2 | 1.1 | 0.6 | 6.7 | 3.8 |
| Ours | | | | | | | | | | | |
| MM-HELIX-7B-Thinking | ✓ | 32.2 | 34.8 | 27.5 | 19.2 | 16.3 | 25.3 | 16.1 | 16.7 | 21.8 | 24.9 |
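The modality gap mentioned in the caption can be made concrete with a little arithmetic. The sketch below is illustrative only: it copies the overall Txt and Img scores of a few models from the table above and reports the difference in percentage points. The name `modality_gap` and the choice of models are assumptions made for this example, not part of the benchmark's tooling.

```python
# Overall (Txt, Img) scores copied from the results table above for a few models.
overall = {
    "GPT-5": (84.5, 58.1),
    "o4-mini": (75.2, 44.7),
    "GPT-4o": (21.8, 11.7),
    "Intern-S1-241B-A28B": (50.4, 33.3),
    "MM-HELIX-7B-Thinking": (21.8, 24.9),
}


def modality_gap(txt: float, img: float) -> float:
    """Text-only score minus image score, in percentage points (hypothetical helper)."""
    return round(txt - img, 1)


gaps = {model: modality_gap(txt, img) for model, (txt, img) in overall.items()}

# Print models from the largest gap to the smallest.
for model, gap in sorted(gaps.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model:<24} {gap:+.1f}")
```

Among these five models, only MM-HELIX-7B-Thinking has a negative gap, meaning its overall image score is higher than its text-only score.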

Algorithms: per-task scores (image input)

| Model | 24 | BuySell | Container | Hills | Crypto | HIndex | Rect | LIS | Rain |
|---|---|---|---|---|---|---|---|---|---|
| Proprietary Models | | | | | | | | | |
| GPT-5 | 96.7 | 80.0 | 93.3 | 73.3 | 100.0 | 96.7 | 90.0 | 93.3 | 73.3 |
| Seed-1.5-VL | 100.0 | 80.0 | 83.3 | 60.0 | 86.7 | 83.3 | 73.3 | 73.3 | 70.0 |
| o4-mini | 86.7 | 10.0 | 36.7 | 43.3 | 60.0 | 66.7 | 50.0 | 63.3 | 40.0 |
| Gemini-2.5-Flash | 96.7 | 43.3 | 66.7 | 56.7 | 83.3 | 76.7 | 56.7 | 70.0 | 50.0 |
| GPT-4.1 | 63.3 | 46.7 | 56.7 | 16.7 | 26.7 | 60.0 | 33.3 | 43.3 | 53.3 |
| GPT-4o | 10.0 | 30.0 | 23.3 | 0.0 | 0.0 | 30.0 | 23.3 | 33.3 | 20.0 |
| Open-Source Models | | | | | | | | | |
| Intern-S1-241B-A28B | 86.7 | 80.0 | 70.0 | 83.3 | 63.3 | 46.7 | 66.7 | 83.3 | 43.3 |
| GLM-4.5V-106B-A12B-Thinking | 56.7 | 16.7 | 40.0 | 3.3 | 23.3 | 23.3 | 33.3 | 53.3 | 13.3 |
| Kimi-VL-16B-A3B-Thinking-2506 | 90.0 | 36.7 | 33.3 | 10.0 | 16.7 | 43.3 | 26.7 | 43.3 | 26.7 |
| GLM-4.1V-9B-Thinking | 76.7 | 10.0 | 43.3 | 13.3 | 20.0 | 30.0 | 16.7 | 30.0 | 36.7 |
| Qwen-2.5-VL-72B | 13.3 | 20.0 | 26.7 | 16.7 | 0.0 | 43.3 | 6.7 | 30.0 | 10.0 |
| Qwen-2.5-VL-32B | 33.3 | 26.7 | 16.7 | 0.0 | 3.3 | 16.7 | 3.3 | 26.7 | 10.0 |
| QVQ-72B-Preview | 76.7 | 20.0 | 26.7 | 3.3 | 0.0 | 20.0 | 3.3 | 33.3 | 6.7 |
| MiniCPM-V-4.5-8B | 53.3 | 6.7 | 20.0 | 13.3 | 6.7 | 30.0 | 13.3 | 33.3 | 3.3 |
| InternVL3-78B | 46.7 | 20.0 | 20.0 | 6.7 | 6.7 | 10.0 | 10.0 | 10.0 | 0.0 |
| InternVL3-38B | 43.3 | 3.3 | 23.3 | 3.3 | 3.3 | 13.3 | 3.3 | 26.7 | 6.7 |
| Llama-4-Scout-109B-A17B-16E | 66.7 | 30.0 | 3.3 | 10.0 | 0.0 | 6.7 | 3.3 | 20.0 | 6.7 |
| Ovis2-34B | 23.3 | 0.0 | 3.3 | 6.7 | 0.0 | 20.0 | 13.3 | 26.7 | 0.0 |
| Gemma-3-27B-IT | 10.0 | 0.0 | 13.3 | 3.3 | 0.0 | 23.3 | 10.0 | 30.0 | 3.3 |
| Qwen-2.5-VL-7B | 10.0 | 0.0 | 6.7 | 0.0 | 0.0 | 10.0 | 3.3 | 23.3 | 0.0 |
| InternVL3-8B | 10.0 | 0.0 | 6.7 | 3.3 | 0.0 | 10.0 | 0.0 | 23.3 | 0.0 |
| Ovis2-8B | 13.3 | 0.0 | 0.0 | 0.0 | 0.0 | 10.0 | 0.0 | 6.7 | 0.0 |
| Ours | | | | | | | | | |
| MM-HELIX-7B-Thinking | 56.7 | 30.0 | 46.7 | 40.0 | 10.0 | 46.7 | 26.7 | 43.3 | 13.3 |
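The per-task scores above can be related to the category-level scores in the main results table. The page does not state how category scores are aggregated, so the sketch below only checks one plausible assumption: an unweighted mean over the nine tasks in this table (columns 24 through Rain). The helper name `macro_average` is hypothetical.

```python
# Per-task scores copied from the table above, in column order:
# 24, BuySell, Container, Hills, Crypto, HIndex, Rect, LIS, Rain.
task_scores = {
    "GPT-5": [96.7, 80.0, 93.3, 73.3, 100.0, 96.7, 90.0, 93.3, 73.3],
    "Seed-1.5-VL": [100.0, 80.0, 83.3, 60.0, 86.7, 83.3, 73.3, 73.3, 70.0],
    "MM-HELIX-7B-Thinking": [56.7, 30.0, 46.7, 40.0, 10.0, 46.7, 26.7, 43.3, 13.3],
}


def macro_average(scores: list[float]) -> float:
    """Unweighted mean over tasks, rounded to one decimal like the tables (assumed aggregation)."""
    return round(sum(scores) / len(scores), 1)


category_means = {model: macro_average(scores) for model, scores in task_scores.items()}
print(category_means)
```

For these three models the means come out to 88.5, 78.9, and 34.8, each of which matches an Img entry in the corresponding row of the main results table. That is consistent with, though not proof of, the category scores being simple per-task averages on image input.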

Graphs: per-task scores (image input)

| Model | EulerCyc | EulerPath | GraphIso | HamilCyc | HamilPath | MaxFlow | ShortDist | TopoSort |
|---|---|---|---|---|---|---|---|---|
| Proprietary Models | | | | | | | | |
| GPT-5 | 33.3 | 33.3 | 53.3 | 40.0 | 60.0 | 80.0 | 90.0 | 13.3 |
| Seed-1.5-VL | 23.3 | 30.0 | 56.7 | 23.3 | 46.7 | 70.0 | 60.0 | 13.3 |
| o4-mini | 33.3 | 33.3 | 53.3 | 33.3 | 50.0 | 66.7 | 56.7 | 10.0 |
| Gemini-2.5-Flash | 30.0 | 36.7 | 43.3 | 26.7 | 46.7 | 63.3 | 66.7 | 13.3 |
| GPT-4.1 | 10.0 | 20.0 | 63.3 | 20.0 | 33.3 | 70.0 | 60.0 | 3.3 |
| GPT-4o | 6.7 | 26.7 | 56.7 | 16.7 | 20.0 | 33.3 | 43.3 | 0.0 |
| Open-Source Models | | | | | | | | |
| Intern-S1-241B-A28B | 16.7 | 26.7 | 50.0 | 16.7 | 23.3 | 50.0 | 56.7 | 0.0 |
| GLM-4.5V-106B-A12B-Thinking | 0.0 | 10.0 | 6.7 | 10.0 | 20.0 | 30.0 | 13.3 | 0.0 |
| Kimi-VL-16B-A3B-Thinking-2506 | 16.7 | 20.0 | 46.7 | 16.7 | 26.7 | 40.0 | 20.0 | 0.0 |
| GLM-4.1V-9B-Thinking | 16.7 | 23.3 | 46.7 | 16.7 | 33.3 | 50.0 | 43.3 | 3.3 |
| Qwen-2.5-VL-72B | 16.7 | 23.3 | 56.7 | 10.0 | 20.0 | 43.3 | 36.7 | 0.0 |
| Qwen-2.5-VL-32B | 13.3 | 20.0 | 30.0 | 16.7 | 23.3 | 40.0 | 36.7 | 0.0 |
| QVQ-72B-Preview | 16.7 | 16.7 | 36.7 | 6.7 | 13.3 | 20.0 | 20.0 | 3.3 |
| MiniCPM-V-4.5-8B | 6.7 | 23.3 | 40.0 | 20.0 | 16.7 | 26.7 | 30.0 | 3.3 |
| InternVL3-78B | 10.0 | 20.0 | 46.7 | 16.7 | 26.7 | 40.0 | 40.0 | 3.3 |
| InternVL3-38B | 10.0 | 23.3 | 46.7 | 16.7 | 13.3 | 33.3 | 36.7 | 0.0 |
| Llama-4-Scout-109B-A17B-16E | 16.7 | 26.7 | 43.3 | 10.0 | 23.3 | 26.7 | 20.0 | 3.3 |
| Ovis2-34B | 16.7 | 23.3 | 53.3 | 23.3 | 16.7 | 23.3 | 13.3 | 6.7 |
| Gemma-3-27B-IT | 16.7 | 26.7 | 33.3 | 16.7 | 23.3 | 36.7 | 20.0 | 3.3 |
| Qwen-2.5-VL-7B | 10.0 | 23.3 | 53.3 | 0.0 | 13.3 | 23.3 | 20.0 | 0.0 |
| InternVL3-8B | 13.3 | 26.7 | 33.3 | 16.7 | 23.3 | 6.7 | 13.3 | 0.0 |
| Ovis2-8B | 16.7 | 10.0 | 26.7 | 23.3 | 13.3 | 16.7 | 16.7 | 0.0 |
| Ours | | | | | | | | |
| MM-HELIX-7B-Thinking | 16.7 | 23.3 | 20.0 | 10.0 | 26.7 | 26.7 | 30.0 | 3.3 |

Puzzles: per-task scores (image input)

| Model | Aqua | Bina | Brid | Calcu | Camp | Eule | Futo | Hito | Kaku | Kuku | Nono | Num | Shin | Sky | Snak | Sudo | Tapa | WLad | WSch |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Proprietary Models | | | | | | | | | | | | | | | | | | | |
| GPT-5 | 33.3 | 23.3 | 83.3 | 30.0 | 63.3 | 53.3 | 33.3 | 83.3 | 26.7 | 100.0 | 26.7 | 86.7 | 80.0 | 53.3 | 10.0 | 26.7 | 50.0 | 36.7 | 100.0 |
| Seed-1.5-VL | 10.0 | 30.0 | 50.0 | 16.7 | 86.7 | 60.0 | 20.0 | 40.0 | 36.7 | 63.3 | 3.3 | 6.7 | 70.0 | 40.0 | 100.0 | 50.0 | 33.3 | 20.0 | 60.0 |
| o4-mini | 26.7 | 13.3 | 73.3 | 23.3 | 53.3 | 50.0 | 30.0 | 43.3 | 43.3 | 76.7 | 13.3 | 50.0 | 43.3 | 43.3 | 96.7 | 3.3 | 43.3 | 30.0 | 100.0 |
| Gemini-2.5-Flash | 3.3 | 20.0 | 60.0 | 3.3 | 46.7 | 46.7 | 16.7 | 63.3 | 36.7 | 40.0 | 0.0 | 40.0 | 40.0 | 40.0 | 83.3 | 40.0 | 36.7 | 10.0 | 70.0 |
| GPT-4.1 | 3.3 | 0.0 | 46.7 | 13.3 | 13.3 | 33.3 | 10.0 | 40.0 | 16.7 | 60.0 | 0.0 | 16.7 | 53.3 | 20.0 | 43.3 | 0.0 | 3.3 | 10.0 | 33.3 |
| GPT-4o | 0.0 | 20.0 | 0.0 | 3.3 | 16.7 | 20.0 | 10.0 | 16.7 | 13.3 | 33.3 | 0.0 | 0.0 | 6.7 | 3.3 | 33.3 | 0.0 | 0.0 | 13.3 | 13.3 |
| Open-Source Models | | | | | | | | | | | | | | | | | | | |
| Intern-S1-241B-A28B | 3.3 | 23.3 | 60.0 | 26.7 | 20.0 | 16.7 | 20.0 | 20.0 | 30.0 | 0.0 | 0.0 | 26.7 | 13.3 | 23.3 | 53.3 | 53.3 | 16.7 | 0.0 | 43.3 |
| GLM-4.5V-106B-A12B-Thinking | 13.3 | 30.0 | 13.3 | 6.7 | 60.0 | 6.7 | 6.7 | 30.0 | 0.0 | 33.3 | 0.0 | 0.0 | 0.0 | 6.7 | 40.0 | 20.0 | 6.7 | 6.7 | 50.0 |
| Kimi-VL-16B-A3B-Thinking-2506 | 3.3 | 16.7 | 20.0 | 6.7 | 16.7 | 13.3 | 26.7 | 13.3 | 16.7 | 10.0 | 0.0 | 10.0 | 3.3 | 6.7 | 50.0 | 10.0 | 0.0 | 0.0 | 26.7 |
| GLM-4.1V-9B-Thinking | 6.7 | 16.7 | 6.7 | 13.3 | 40.0 | 10.0 | 3.3 | 16.7 | 13.3 | 20.0 | 0.0 | 10.0 | 0.0 | 3.3 | 30.0 | 3.3 | 6.7 | 0.0 | 0.0 |
| Qwen-2.5-VL-72B | 0.0 | 6.7 | 13.3 | 10.0 | 23.3 | 16.7 | 10.0 | 6.7 | 0.0 | 6.7 | 0.0 | 6.7 | 16.7 | 3.3 | 13.3 | 6.7 | 0.0 | 0.0 | 10.0 |
| Qwen-2.5-VL-32B | 3.3 | 0.0 | 10.0 | 3.3 | 6.7 | 3.3 | 16.7 | 0.0 | 6.7 | 13.3 | 0.0 | 6.7 | 3.3 | 3.3 | 16.7 | 3.3 | 0.0 | 0.0 | 3.3 |
| QVQ-72B-Preview | 10.0 | 13.3 | 6.7 | 6.7 | 6.7 | 6.7 | 16.7 | 10.0 | 13.3 | 0.0 | 0.0 | 0.0 | 6.7 | 0.0 | 0.0 | 0.0 | 0.0 | 6.7 | 10.0 |
| MiniCPM-V-4.5-8B | 6.7 | 3.3 | 10.0 | 10.0 | 20.0 | 10.0 | 20.0 | 6.7 | 6.7 | 6.7 | 0.0 | 0.0 | 0.0 | 0.0 | 6.7 | 0.0 | 0.0 | 0.0 | 16.7 |
| InternVL3-78B | 0.0 | 0.0 | 30.0 | 26.7 | 3.3 | 3.3 | 6.7 | 3.3 | 0.0 | 13.3 | 0.0 | 0.0 | 6.7 | 3.3 | 6.7 | 0.0 | 0.0 | 3.3 | 0.0 |
| InternVL3-38B | 3.3 | 3.3 | 16.7 | 20.0 | 0.0 | 3.3 | 13.3 | 10.0 | 6.7 | 10.0 | 0.0 | 0.0 | 3.3 | 3.3 | 3.3 | 0.0 | 0.0 | 0.0 | 3.3 |
| Llama-4-Scout-109B-A17B-16E | 6.7 | 10.0 | 13.3 | 3.3 | 30.0 | 16.7 | 3.3 | 23.3 | 10.0 | 3.3 | 0.0 | 0.0 | 20.0 | 3.3 | 13.3 | 0.0 | 0.0 | 6.7 | 16.7 |
| Ovis2-34B | 13.3 | 30.0 | 6.7 | 13.3 | 6.7 | 13.3 | 30.0 | 10.0 | 0.0 | 10.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 3.3 | 3.3 | 0.0 | 0.0 |
| Gemma-3-27B-IT | 6.7 | 3.3 | 10.0 | 3.3 | 0.0 | 0.0 | 6.7 | 13.3 | 10.0 | 3.3 | 0.0 | 0.0 | 3.3 | 0.0 | 3.3 | 0.0 | 0.0 | 0.0 | 10.0 |
| Qwen-2.5-VL-7B | 13.3 | 0.0 | 3.3 | 0.0 | 6.7 | 0.0 | 10.0 | 6.7 | 10.0 | 16.7 | 0.0 | 0.0 | 0.0 | 0.0 | 3.3 | 0.0 | 0.0 | 6.7 | 3.3 |
| InternVL3-8B | 6.7 | 0.0 | 3.3 | 16.7 | 10.0 | 0.0 | 10.0 | 3.3 | 6.7 | 0.0 | 0.0 | 0.0 | 0.0 | 3.3 | 0.0 | 0.0 | 6.7 | 0.0 | 3.3 |
| Ovis2-8B | 0.0 | 10.0 | 0.0 | 0.0 | 0.0 | 6.7 | 3.3 | 10.0 | 0.0 | 3.3 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 3.3 | 6.7 | 0.0 | 6.7 |
| Ours | | | | | | | | | | | | | | | | | | | |
| MM-HELIX-7B-Thinking | 3.3 | 23.3 | 50.0 | 6.7 | 46.7 | 43.3 | 20.0 | 13.3 | 30.0 | 26.7 | 13.3 | 23.3 | 60.0 | 20.0 | 16.7 | 30.0 | 6.7 | 6.7 | 40.0 |

Games: per-task scores (image input)

| Model | Maze | Mine | Nib | Slide | Soko | Hanoi |
|---|---|---|---|---|---|---|
| Proprietary Models | | | | | | |
| GPT-5 | 10.0 | 23.3 | 10.0 | 86.7 | 16.7 | 93.3 |
| Seed-1.5-VL | 6.7 | 53.3 | 20.0 | 63.3 | 3.3 | 53.3 |
| o4-mini | 6.7 | 26.7 | 10.0 | 66.7 | 13.3 | 90.0 |
| Gemini-2.5-Flash | 0.0 | 50.0 | 13.3 | 46.7 | 3.3 | 56.7 |
| GPT-4.1 | 3.3 | 0.0 | 0.0 | 3.3 | 0.0 | 46.7 |
| GPT-4o | 0.0 | 0.0 | 0.0 | 3.3 | 0.0 | 36.7 |
| Open-Source Models | | | | | | |
| Intern-S1-241B-A28B | 0.0 | 20.0 | 0.0 | 36.7 | 0.0 | 33.3 |
| GLM-4.5V-106B-A12B-Thinking | 0.0 | 16.7 | 3.3 | 10.0 | 3.3 | 50.0 |
| Kimi-VL-16B-A3B-Thinking-2506 | 0.0 | 3.3 | 0.0 | 3.3 | 0.0 | 10.0 |
| GLM-4.1V-9B-Thinking | 0.0 | 0.0 | 3.3 | 3.3 | 0.0 | 26.7 |
| Qwen-2.5-VL-72B | 0.0 | 20.0 | 0.0 | 36.7 | 3.3 | 26.7 |
| Qwen-2.5-VL-32B | 0.0 | 16.7 | 3.3 | 33.3 | 0.0 | 6.7 |
| QVQ-72B-Preview | 0.0 | 3.3 | 3.3 | 6.7 | 0.0 | 16.7 |
| MiniCPM-V-4.5-8B | 0.0 | 0.0 | 0.0 | 3.3 | 3.3 | 13.3 |
| InternVL3-78B | 0.0 | 0.0 | 3.3 | 6.7 | 0.0 | 16.7 |
| InternVL3-38B | 0.0 | 3.3 | 3.3 | 10.0 | 6.7 | 13.3 |
| Llama-4-Scout-109B-A17B-16E | 0.0 | 3.3 | 0.0 | 10.0 | 0.0 | 33.3 |
| Ovis2-34B | 0.0 | 0.0 | 0.0 | 3.3 | 0.0 | 6.7 |
| Gemma-3-27B-IT | 0.0 | 0.0 | 0.0 | 3.3 | 0.0 | 3.3 |
| Qwen-2.5-VL-7B | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 3.3 |
| InternVL3-8B | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 6.7 |
| Ovis2-8B | 0.0 | 0.0 | 0.0 | 0.0 | 3.3 | 3.3 |
| Ours | | | | | | |
| MM-HELIX-7B-Thinking | 3.3 | 16.7 | 23.3 | 26.7 | 3.3 | 26.7 |

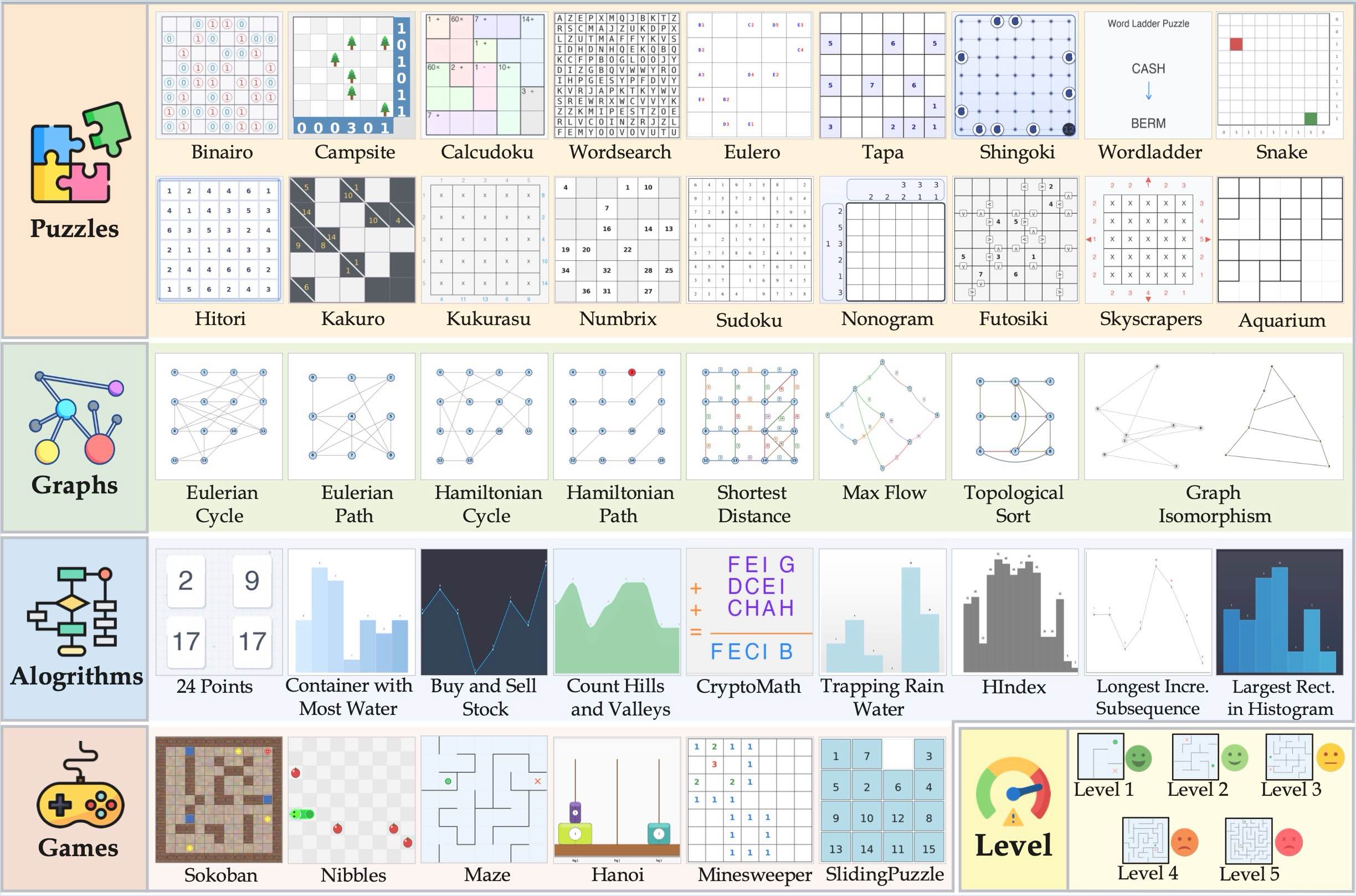

MM-HELIX: Overall, Algorithms, Graphs, Puzzles, Games

Let's Try MM-HELIX Benchmark!

Dataset Visualization